This week we revisited AI in Education and took a deeper dive. We looked at ways it is directly impacting classrooms now, and ways it could impact them in the future. One facet is how AI can be implemented to enhance the learning environment. Students can use it as a research tool or idea generator for inquiry-based learning projects, have it generate practice problems on a subject they struggle with (and provide detailed feedback!), use it to translate content in real time, and have it create graphics or images when they don’t have an art background (or the desire to pursue one) but still need quality imagery to supplement a project. For teachers specifically, it can generate basic lesson plans, which teachers can then go back in and modify as needed. This is a huge time saver.

One of the reflection prompts this week was on plagiarism. Specifically: what strategies can we, as educators, employ to minimize the risk of plagiarism when we allow students to use AI tools?

My response is that we should always educate students about copyright, fair use, and citing others’ work (both how and why we do it). This is especially important in upper-level courses (where you could also explore APA or MLA citation formats rather than just listing websites or books), as these students may go on to post-secondary, where proper citations are expected. This conversation naturally leads to “but what about AI-generated works?” That question still has not been fully answered, and I think that as of now (November 2023), the stance you take on it as an educator may vary from person to person. For me, at a minimum, my students should cite when they used AI language models, and how. As I mentioned, the reason for doing so is not just to keep them from cheating. These models are trained on huge amounts of text written by other people, so the content they produce inherently incorporates those people’s words and thoughts. By not citing when you use ChatGPT, for example, you are not acknowledging the work that other people put into the piece it created.

It is impossible to give proper credit to every individual whose work ChatGPT drew from, but for now, I think simply stating that you used it, what prompt you used, and how you incorporated the information is enough.

Now you may be thinking, “Well, that is nice and all, and I agree, but won’t students still cheat?” To that, I would say yes. Some will. I would argue, though, that those students would cheat no matter how good a reason you gave them not to. First, having an open discussion with students about academic theft and integrity is a definite first step that will at least get many of them thinking about the social and moral implications of using this tech improperly. Second, to prevent cheating and plagiarism, I would have students use this tech for projects that require creative original work, in-depth thought, self-reflection, or a combination of these. This makes it much harder for them to have the tech write the whole piece for them. If you want students to write a more “fill in the answer” kind of piece, it would be best to have them do it in class with pen and paper, or on a computer with browser locks and supervision. Finally, you could consider using an “AI checker,” but this software is unreliable and buggy, and the technology changes far too quickly for it to keep up with increasingly sophisticated AI language models.

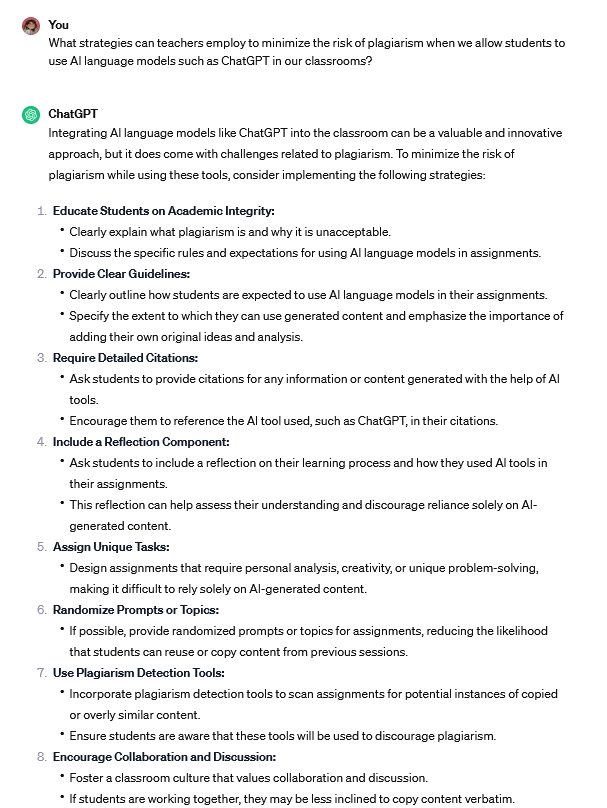

To close, I wanted to see what ChatGPT thought of this question, so I have included an image of our “conversation” (limited to the first 8 suggestions it gave me). It seems our “thoughts” are rather similar in a lot of ways!
